NN Advanced Optimization

There are optimization algorithms that minimize the cost function more efficiently than gradient descent.

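Remember that gradient descent (GD) updates the parameters as

$$
w_j = w_j - \alpha \frac{\partial}{\partial w_j} J(\vec{w}, b), \qquad b = b - \alpha \frac{\partial}{\partial b} J(\vec{w}, b)
$$

where $\alpha$ is the learning rate.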

One way to make it more efficient is to let the algorithm adjust the size of alpha (the learning rate) automatically as it trains. The Adam algorithm does this.

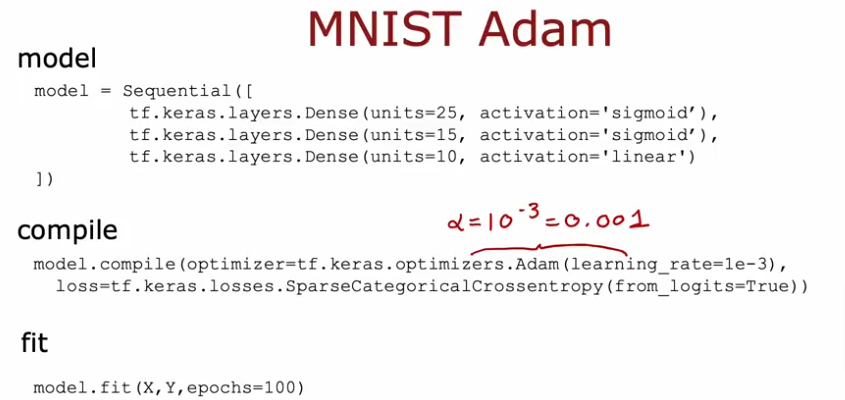
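For comparison, here is a minimal sketch of plain gradient descent with a fixed learning rate. The cost J(w) = (w - 3)², the function names and the three alpha values are illustrative assumptions, not from the notes. It shows why choosing a fixed alpha by hand is awkward: too small and progress is slow, too large and the updates diverge.

```python
# Plain gradient descent on an illustrative cost J(w) = (w - 3)^2 (an assumption),
# whose minimum is at w = 3.

def dcost_dw(w):
    # Derivative of J(w) = (w - 3)^2
    return 2.0 * (w - 3.0)

def gradient_descent(w0, alpha, steps):
    """Repeat w = w - alpha * dJ/dw with a fixed learning rate alpha."""
    w = w0
    for _ in range(steps):
        w = w - alpha * dcost_dw(w)
    return w

w_small = gradient_descent(w0=0.0, alpha=0.01, steps=100)  # slow: ends near 2.6
w_good = gradient_descent(w0=0.0, alpha=0.1, steps=100)    # reaches w = 3
w_big = gradient_descent(w0=0.0, alpha=1.1, steps=100)     # overshoots more each step and diverges
print(w_small, w_good, w_big)
```

Here each step multiplies the distance to the minimum by (1 - 2·alpha), so any alpha above 1 makes that factor larger than 1 in magnitude and the updates blow up.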

Adam Algorithm

Adam (Adaptive Moment Estimation) uses a different learning rate for every parameter. If a parameter keeps moving in the same direction, Adam increases its learning rate; if it keeps bouncing back and forth, Adam decreases it.
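For reference, the standard Adam update (Kingma and Ba) for a single parameter $w$, with gradient $g_t = \partial J / \partial w$ at step $t$, keeps two running averages per parameter:

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^t} \\
w_t &= w_{t-1} - \alpha \, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
$$

Common defaults are $\beta_1 = 0.9$, $\beta_2 = 0.999$ and $\epsilon = 10^{-8}$. When a parameter's gradients keep the same sign, $\hat{m}_t / \sqrt{\hat{v}_t}$ stays near $\pm 1$ and the step is close to $\alpha$; when they alternate, $\hat{m}_t$ averages toward zero and the step shrinks.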

Adam still needs an initial global learning rate, so it is worth trying a few initial values and seeing how they affect training.

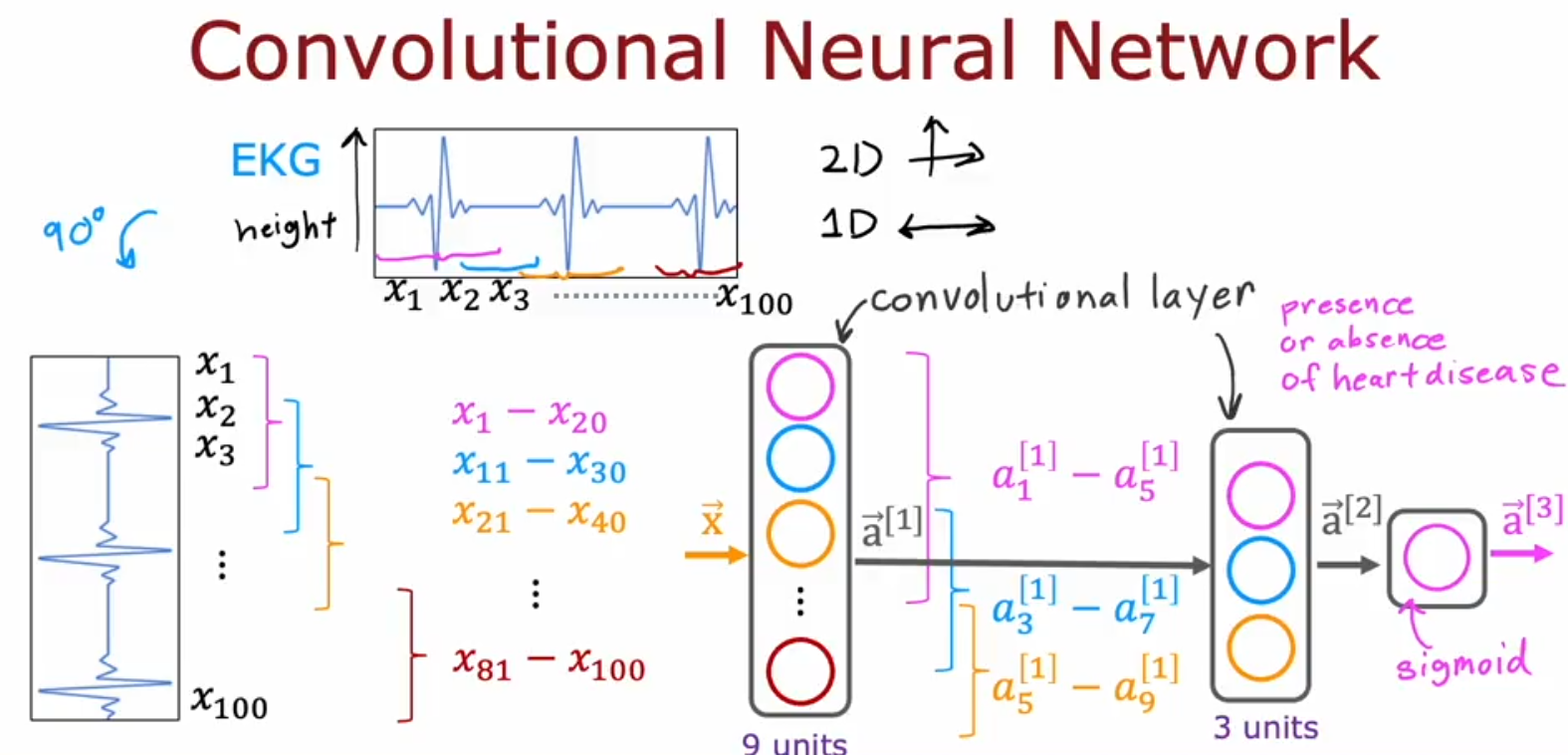
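A minimal pure-Python sketch of Adam, assuming a made-up two-parameter cost J(w1, w2) = (w1 - 3)² + 100(w2 + 1)², where the second parameter's gradients are about 100 times steeper. The cost and the names `grad` and `adam` are hypothetical; alpha = 0.1 is picked for this toy problem, while beta1, beta2 and eps use the common defaults. Each parameter keeps its own running averages, so one global alpha works for both.

```python
import math

# Illustrative cost (an assumption, not from the notes):
#   J(w1, w2) = (w1 - 3)^2 + 100 * (w2 + 1)^2, minimum at (3, -1)

def grad(w):
    """Gradient of J with respect to [w1, w2]."""
    return [2.0 * (w[0] - 3.0), 200.0 * (w[1] + 1.0)]

def adam(w, alpha=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Run Adam, keeping separate moment estimates for every parameter."""
    w = list(w)
    m = [0.0] * len(w)  # running mean of gradients, one per parameter
    v = [0.0] * len(w)  # running mean of squared gradients, one per parameter
    for t in range(1, steps + 1):
        g = grad(w)
        for j in range(len(w)):
            m[j] = beta1 * m[j] + (1 - beta1) * g[j]
            v[j] = beta2 * v[j] + (1 - beta2) * g[j] ** 2
            m_hat = m[j] / (1 - beta1 ** t)  # bias correction: m and v start at 0
            v_hat = v[j] / (1 - beta2 ** t)
            # The effective step adapts per parameter through m_hat / sqrt(v_hat)
            w[j] -= alpha * m_hat / (math.sqrt(v_hat) + eps)
    return w

w_final = adam([0.0, 0.0])
print(w_final)  # both entries should end close to the minimum at (3, -1)
```

With plain gradient descent, a single alpha small enough to keep w2 stable (below 2/200 = 0.01 for this cost) would make w1 crawl; Adam's per-parameter scaling eases that trade-off.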

Convolutional Layers

Instead of dense layers, where every neuron takes all of the previous layer's outputs as input, a convolutional layer has each neuron look only at a specific region of its input, such as one part of an image. This has several advantages:

- Faster to compute

- Needs less training data

- Less prone to overfitting

A neural network built from one or more convolutional layers is called a convolutional neural network (CNN).
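One way to see why a convolutional layer is cheaper and needs less data is to count weights. The sizes below (100 inputs, 9 units, a window of 20 inputs per unit) and the function names are hypothetical, chosen only for illustration.

```python
# Hypothetical sizes (not from the notes): 100 inputs, 9 units,
# and each convolutional unit sees a window of 20 consecutive inputs.

def dense_layer_params(n_inputs, n_units):
    # Every unit has one weight per input plus a bias.
    return n_units * (n_inputs + 1)

def windowed_layer_params(window_size, n_units):
    # Every unit has one weight per input in its window plus a bias.
    return n_units * (window_size + 1)

dense = dense_layer_params(n_inputs=100, n_units=9)
windowed = windowed_layer_params(window_size=20, n_units=9)
print(dense, windowed)  # 909 189
```

This counts a separate set of weights for each unit, matching the description above. Standard CNN layers in libraries also share one set of window weights across all positions, which shrinks the count further.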

For example, with an EKG (electrocardiogram) as input:

- we turn the EKG trace sideways so it becomes a list of values, and assign each neuron to scan one part of it; since the neurons in a layer do not depend on each other, they can all be computed simultaneously

- the first layer might have, say, 9 units, each responsible for one part of the input

- the next layer can be another convolutional layer: its first unit looks at one part of the previous layer's outputs, its second unit at another part, and so on

- the final layer is a sigmoid output layer that makes the binary prediction of whether the EKG indicates heart disease
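The EKG architecture above can be sketched as a forward pass in pure Python. Everything numeric here is an assumption for illustration: 100 input samples, a first layer of 9 units that each see 20 samples (windows starting 10 apart), a second layer of 3 units that each see 5 first-layer outputs (starting 2 apart), and a sine wave standing in for a real EKG. The helper names are hypothetical and the weights are random and untrained.

```python
import math
import random

# Hypothetical sizes (assumptions, not from the notes):
#   input:   100 EKG samples
#   layer 1: 9 units, each sees a window of 20 samples, windows start 10 apart
#   layer 2: 3 units, each sees a window of 5 layer-1 outputs, starts 2 apart
#   output:  1 sigmoid unit over the 3 layer-2 outputs

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def make_windowed_layer(n_units, window, stride, rng):
    """Give each unit its own (untrained, random) weights for its own window."""
    return [
        {"start": u * stride,
         "w": [rng.uniform(-0.1, 0.1) for _ in range(window)],
         "b": 0.0}
        for u in range(n_units)
    ]

def windowed_forward(layer, x):
    """Each unit only reads x[start:start + window]; units are independent."""
    out = []
    for unit in layer:
        patch = x[unit["start"]:unit["start"] + len(unit["w"])]
        if len(patch) != len(unit["w"]):
            raise ValueError("window runs past the end of the input")
        z = sum(wi * xi for wi, xi in zip(unit["w"], patch)) + unit["b"]
        out.append(relu(z))
    return out

rng = random.Random(0)
ekg = [math.sin(i / 5.0) for i in range(100)]  # stand-in signal, not real EKG data

layer1 = make_windowed_layer(n_units=9, window=20, stride=10, rng=rng)
layer2 = make_windowed_layer(n_units=3, window=5, stride=2, rng=rng)
w_out = [rng.uniform(-0.1, 0.1) for _ in range(3)]
b_out = 0.0

a1 = windowed_forward(layer1, ekg)  # 9 activations
a2 = windowed_forward(layer2, a1)   # 3 activations
p = sigmoid(sum(w * a for w, a in zip(w_out, a2)) + b_out)
print(len(a1), len(a2), p)
```

With untrained weights, p is just a number between 0 and 1; in practice the weights would be learned, for example with Adam.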