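```python
from xgboost import XGBClassifier

# Create a classifier with default hyperparameters
model = XGBClassifier()

# Train on the training split
model.fit(X_train, y_train)

# Predict class labels for the test split
y_pred = model.predict(X_test)
```

Random Forest Algorithm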

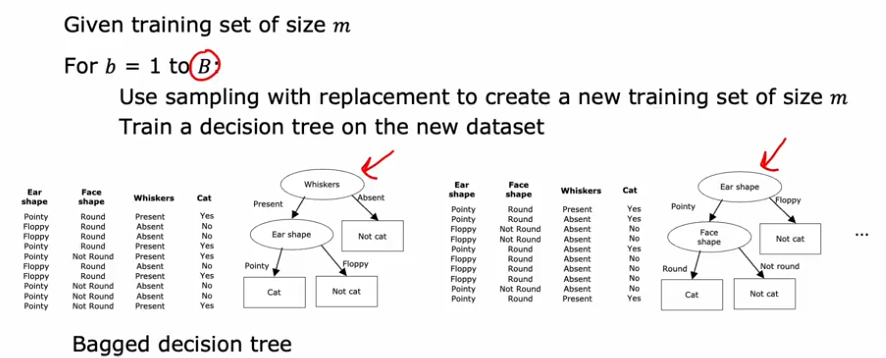

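A tree ensemble contains several decision trees (DTs). To give each tree its own training set, we draw a new training set from the original one by sampling with replacement and train one tree on it. This is called a bagged decision tree, where B is the number of trees (and resampled training sets) in the ensemble.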

- Here you can see that the root (main) node has changed

- Often the root node stays the same even when a tree is trained on a newly resampled training set

- A larger B helps, but going much beyond 100 trees costs more compute time than it gains in accuracy
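The resampling step behind bagging can be sketched in a few lines. This is a minimal illustration, assuming the training examples fit in a plain Python list; the helper names `bootstrap_sample` and `make_bagged_training_sets` are made up for this sketch and do not come from any library.

```python
import random


def bootstrap_sample(examples, rng):
    """Draw len(examples) items from examples, sampling with replacement."""
    return [rng.choice(examples) for _ in examples]


def make_bagged_training_sets(examples, num_trees, seed=0):
    """Build one resampled training set per tree (num_trees plays the role of B)."""
    rng = random.Random(seed)
    return [bootstrap_sample(examples, rng) for _ in range(num_trees)]


training_set = list(range(10))  # stand-in for 10 training examples
bags = make_bagged_training_sets(training_set, num_trees=3)
for b, bag in enumerate(bags, start=1):
    # Some examples typically appear more than once and others not at all.
    print(f"training set {b}: {sorted(bag)}")
```

Each resampled set has the same size as the original, and one decision tree would then be trained on each of them.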

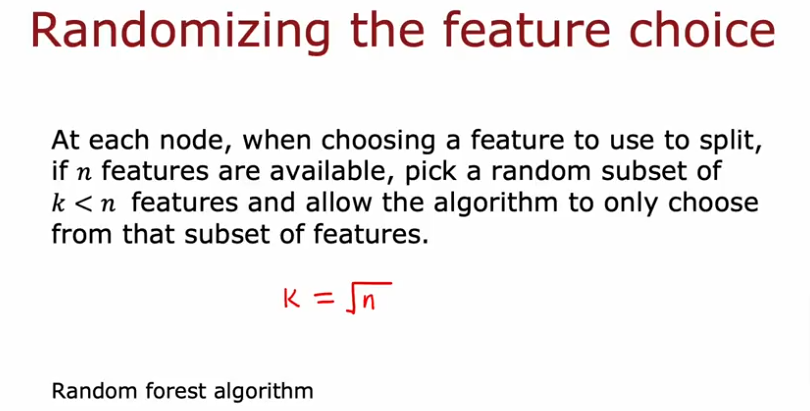

- Small changes to the dataset from resampling often do not change the decision trees much, so we end up using the random forest algorithm shown below

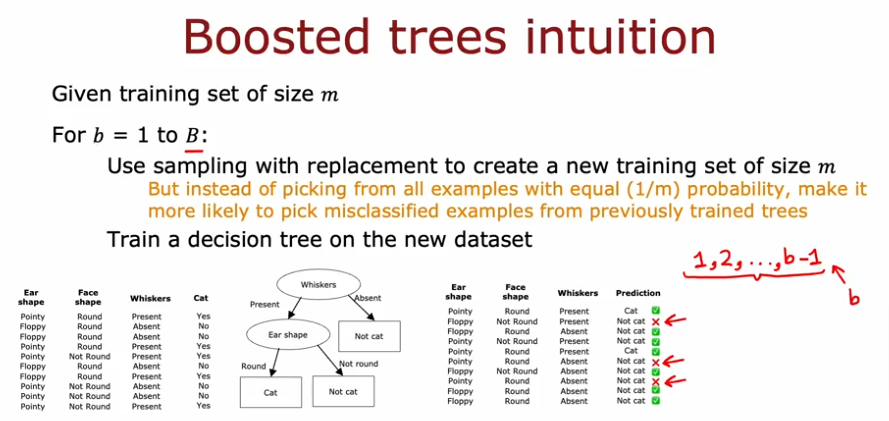

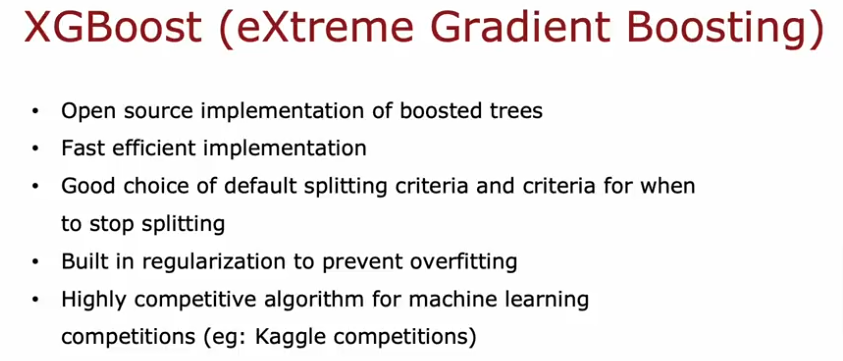
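The usual way a random forest makes its trees differ more than plain bagging does is to let each split choose only from a random subset of the features. The sketch below assumes n features and the common choice of roughly sqrt(n) candidates per split; the function name `candidate_features` is hypothetical, and the figures above may use a different subset size.

```python
import math
import random


def candidate_features(num_features, rng):
    """Pick about sqrt(num_features) distinct feature indices for one split."""
    k = max(1, round(math.sqrt(num_features)))
    return sorted(rng.sample(range(num_features), k))


rng = random.Random(0)
for node in range(3):
    # Each node gets its own random subset, so different trees (and nodes)
    # are pushed toward splitting on different features.
    print(f"node {node}: may split on features {candidate_features(16, rng)}")
```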

XGBoost

XGBoost is the most commonly used boosted decision tree algorithm (it is a boosting method, not a random forest). It modifies the bagged-tree procedure above by focusing each new tree on the examples that the trees built so far still get wrong, after the first, second, third round, and so on. As an analogy: if we already know how to do 10 things out of 13, there is little point in practicing those 10 again. Instead we practice the 3 we get wrong, over and over, until we are better at them.

Instead of leaving examples out by resampling the training set, XGBoost assigns a different weight to each training example.
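The "practice what you got wrong" intuition can be illustrated with a toy reweighting loop. This is only a sketch of the idea, not XGBoost's actual update rule (XGBoost fits each new tree using gradients of the loss); the function `reweight` and its `boost` factor are made up for illustration.

```python
def reweight(weights, was_correct, boost=2.0):
    """Multiply the weight of each misclassified example by `boost`, then normalize."""
    raw = [w if ok else w * boost for w, ok in zip(weights, was_correct)]
    total = sum(raw)
    return [w / total for w in raw]


# 13 "things to practice": the first 10 are already right, the last 3 are wrong.
was_correct = [True] * 10 + [False] * 3
weights = [1 / 13] * 13  # start with equal attention on every example

for round_number in range(1, 4):
    weights = reweight(weights, was_correct)
    share_on_wrong = sum(w for w, ok in zip(weights, was_correct) if not ok)
    print(f"round {round_number}: weight on the 3 wrong examples = {share_on_wrong:.2f}")
```

No example is thrown away: the 10 correct ones keep a nonzero weight, but the 3 wrong ones receive a growing share of the attention each round.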

Code

Classification


Regression

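```python
from xgboost import XGBRegressor

# Create a regressor with default hyperparameters
model = XGBRegressor()

# Train on the training split
model.fit(X_train, y_train)

# Predict continuous target values for the test split
y_pred = model.predict(X_test)
```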