Neural Networks

Intro

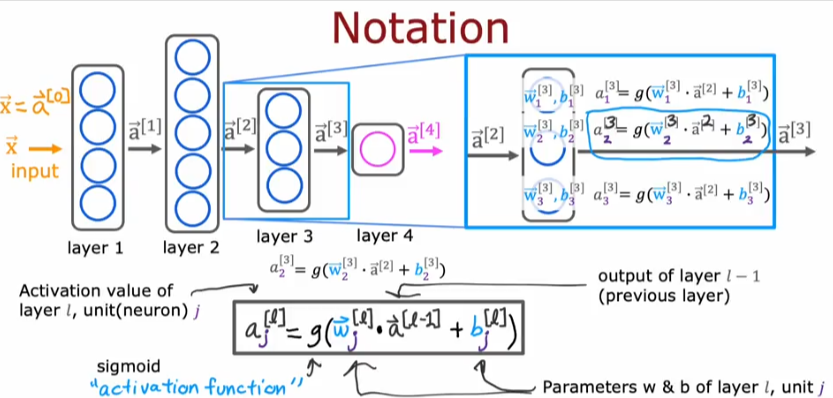

The image above might seem complicated, but it’s fairly straightforward.

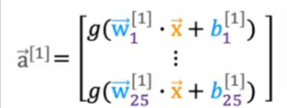

- Think of a neural network as a chain of workers (neurons, indexed by j) grouped into layers. A layer with 25 neurons has 25 formulas, one per neuron. In terms of parameters, that is 25 sets of w_j and b_j, one set for each neuron

- Each layer accepts input either from user/data or from another layer.

- Each layer produces an output (its activation values, written a[l]) and passes it to the next layer or returns it as the final output

- Each layer is numbered l, as you see above, and its parameters carry the same label: w[l] and b[l] belong to layer l, while the input to layer l is a[l-1], the output of the previous layer

- Each unit within a layer is indexed by the subscript j, as you see above. A layer with 3 units produces 3 activations, a_1 to a_3, using the parameters w_1 to w_3 and b_1 to b_3

- So whenever you see the subscript j, it identifies the unit (neuron) within the layer

- g is the activation function. So far it has been the sigmoid function, as you remember. The value it outputs, a, is called the activation

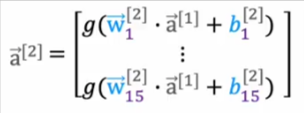

- The image above shows the notation for layer 3

- The same formula works for every layer, including layer 1, whose input is a[0] (the input x)

- In the future we will see how to use other functions besides the sigmoid function

- So now we know how to calculate the activations of any layer given the output of the previous layer
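The layer computation described above can be sketched in plain Python. This is a minimal illustration using only the standard library, assuming the usual per-unit form a_j = g(w_j · a_prev + b_j); the function names `sigmoid` and `dense` and all numbers are made up for the example.

```python
import math

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(a_prev, W, b, g=sigmoid):
    """Compute one layer's activations from the previous layer's output.

    a_prev: list of activations from layer l-1
    W:      list of weight vectors, one per unit j in layer l
    b:      list of biases, one per unit j in layer l
    """
    a = []
    for w_j, b_j in zip(W, b):
        # z_j = w_j . a_prev + b_j for unit j
        z_j = sum(w * x for w, x in zip(w_j, a_prev)) + b_j
        a.append(g(z_j))
    return a

# Hypothetical numbers: 2 inputs feeding a layer with 3 units.
a0 = [1.0, 2.0]
W1 = [[1.0, -0.5], [0.5, 0.5], [-1.0, 0.0]]
b1 = [0.0, -1.5, 3.0]
a1 = dense(a0, W1, b1)
print(a1)  # three activations, one per unit
```

Calling `dense` again with `a1` as input and a new set of parameters would give the next layer's activations, which is all that chaining layers amounts to.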

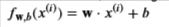

- The function implemented by a neuron with no activation is the same as the linear regression model we covered earlier

- The function implemented by a neuron/unit with a sigmoid activation is the same as in the logistic regression section earlier
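For reference, the two cases above can be written side by side. This assumes the standard forms of linear and logistic regression for a single unit with weights w, bias b and input x:

```latex
\begin{aligned}
\text{no activation (linear regression):} \quad & a = \vec{w} \cdot \vec{x} + b \\
\text{sigmoid activation (logistic regression):} \quad & a = g(\vec{w} \cdot \vec{x} + b) = \frac{1}{1 + e^{-(\vec{w} \cdot \vec{x} + b)}}
\end{aligned}
```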

Make Predictions

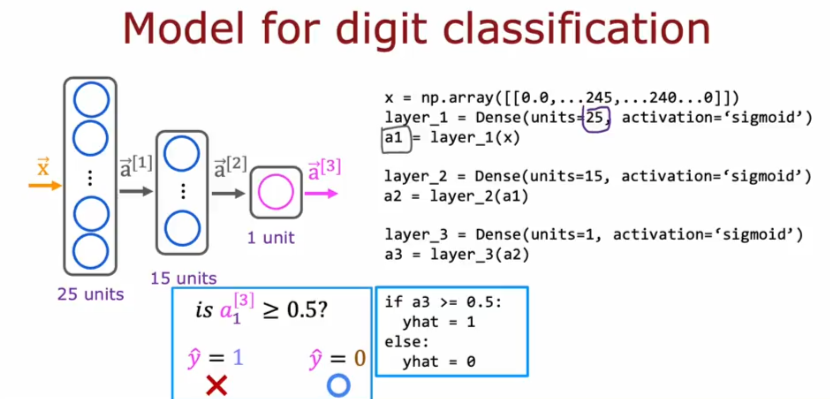

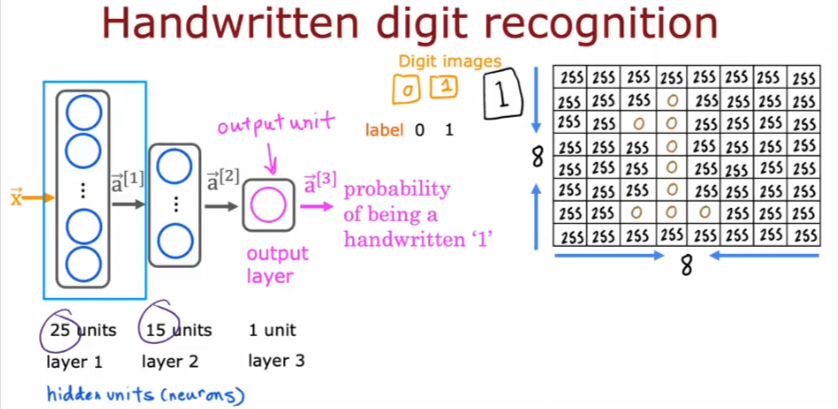

Let’s design something that will recognize handwritten digits

Forward Propagation

Because the activations are computed layer by layer from left to right (from input to output), this is called forward propagation. Backward propagation runs in the opposite direction and is used for learning

- Let’s say we use an 8x8 pixel image and we want to know whether it shows a 0 or a 1

- We have 3 layers: the first has 25 neurons (units), the second has 15 and the third has 1 unit

- The input x could also be labeled as a[0]

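- So for the first layer, a[1] can be written as a[1]_j = g(w[1]_j · a[0] + b[1]_j), for j = 1 to 25

- For layer 2 we have a[2]_j = g(w[2]_j · a[1] + b[2]_j), for j = 1 to 15

- For layer 3 we have a single unit, a[3] = g(w[3]_1 · a[2] + b[3]_1), which is the predicted probability. Note that this probability can be labeled as f(x) as well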
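The three steps above can be sketched as a small forward pass in plain Python. The weights here are random placeholders (not trained), the input is a made-up flattened 8x8 image, and `random_layer` is a hypothetical helper, so the prediction is meaningless; the point is only to show how the 64 → 25 → 15 → 1 shapes chain together.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(a_prev, W, b):
    """One layer: a_j = g(w_j . a_prev + b_j) for every unit j."""
    return [sigmoid(sum(w * x for w, x in zip(w_j, a_prev)) + b_j)
            for w_j, b_j in zip(W, b)]

def random_layer(n_in, n_units, rng):
    """Untrained placeholder parameters, for shape checking only."""
    W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_units)]
    b = [0.0] * n_units
    return W, b

rng = random.Random(0)
W1, b1 = random_layer(64, 25, rng)   # layer 1: 25 units, 64 inputs each
W2, b2 = random_layer(25, 15, rng)   # layer 2: 15 units, 25 inputs each
W3, b3 = random_layer(15, 1, rng)    # layer 3: 1 unit, 15 inputs

x = [rng.random() for _ in range(64)]  # a[0]: flattened 8x8 image (made up)

a1 = dense(x, W1, b1)    # 25 activations
a2 = dense(a1, W2, b2)   # 15 activations
a3 = dense(a2, W3, b3)   # 1 activation
probability = a3[0]      # f(x)
prediction = 1 if probability >= 0.5 else 0
print(len(a1), len(a2), len(a3), prediction)
```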

TensorFlow

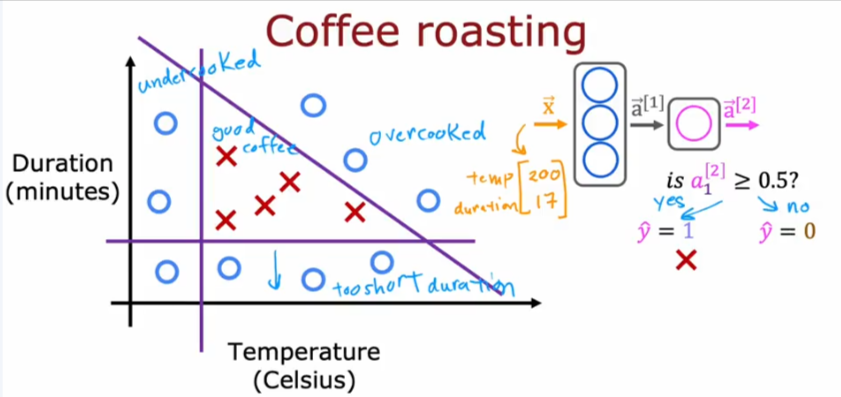

Coffee Roasting

TensorFlow is a framework, like PyTorch, for building and deploying ML models. Let’s go through a simple example to show how the same algorithm can be used in countless situations.

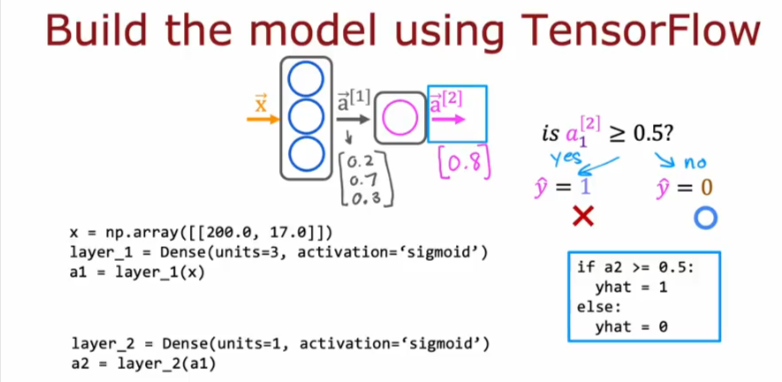

Let’s say we want to predict (run inference on) which temperature and duration will give us perfectly roasted coffee beans.

- As you see in the image above, the circles indicate beans that are not well roasted

- Some temperatures leave them undercooked or overcooked

- Some durations will leave them in the same condition as well

- As you can see, the input will be two values: temperature and duration

- They will be fed as x into the first layer, whose activations feed a second layer, which outputs a final activation value a

- That final value a is compared against 0.5: if it is greater than or equal to 0.5, the model predicts that the beans are perfectly roasted

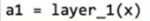

Let’s break it down:

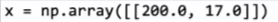

- The input x will be an array of two values

- Layer 1 has 3 neurons/units and uses the sigmoid function to activate (produce an output). Dense is the name of this layer type, in which every unit receives every input from the previous layer

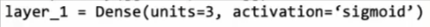

- The output of layer 1 is a1, obtained by applying layer 1 to the input x. It has 3 values, one from each of the 3 units/neurons in layer 1. It is shown as

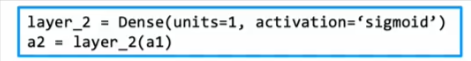

- Then we have layer 2, which has 1 unit/neuron and uses the same sigmoid function

- It takes a1 as input and outputs a2

- Finally, you test the value of a2 against the threshold to see whether we have a 1 or a 0

- The entire model will be shown as this
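As a rough sketch of the steps above in plain Python (not TensorFlow code), the two layers and the threshold test could look like this. The weights, biases and input are placeholders chosen for illustration, not trained values, and `dense` and `predict` are hypothetical helper names. In TensorFlow each `dense` call would correspond to a `Dense` layer with a sigmoid activation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(a_prev, W, b):
    """One fully connected layer with sigmoid activation."""
    return [sigmoid(sum(w * a for w, a in zip(w_j, a_prev)) + b_j)
            for w_j, b_j in zip(W, b)]

# Placeholder parameters, NOT trained values (assumption for illustration).
W1 = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # layer 1: 3 units, 2 inputs each
b1 = [0.0, 0.0, 0.5]
W2 = [[1.0, -1.0, 2.0]]                       # layer 2: 1 unit, 3 inputs
b2 = [-1.0]

def predict(x, threshold=0.5):
    a1 = dense(x, W1, b1)        # 3 activations from layer 1
    a2 = dense(a1, W2, b2)[0]    # single activation from layer 2
    y_hat = 1 if a2 >= threshold else 0
    return a1, a2, y_hat

# Hypothetical input [temperature, duration], assumed already scaled.
x = [0.4, -0.2]
a1, a2, y_hat = predict(x)
print(a1, a2, y_hat)
```

With trained parameters in place of the placeholders, `y_hat` equal to 1 would mean the beans are predicted to be perfectly roasted.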

Digit Classification

So the example explained above in the introduction will look like this